pastpage, an LLM proof of concept

Have you ever found yourself blocked at work, researching the issue that is blocking you, opening dozens of web pages and multiple, slightly different Stack Overflow questions and GitHub issues?

You scan page after page, only to realise that you need to revisit one of those 15 Stack Overflow questions or 20 GitHub issues, but since they were almost the same, it’s hard to find the exact one you are looking for. Maybe you remember something about the problem the author was facing, but nothing concrete.

Or maybe some day you will want to find this very article that you are reading now, and the only thing you can remember about it is that I am using some obscure Linux distribution, but you cannot remember its name (and let’s assume you are an avid reader of personal blogs, with dozens of them in your history). Either way, the name or title of a page is not very meaningful across all the pages you have visited, yet that is what ends up in your browser history.

This is something I have encountered many times, and I started thinking: could a local LLM-based solution help here? If it can, is this the best way to solve the problem? I’m not sure, but this is not a terrible use case, and it’s not like I am building a startup on this lazy, hype-driven assumption. So what could go wrong with trying it out, seeing what it’s like to use local LLMs in action, and finding out what it’s like to build something that runs locally on my laptop rather than on some remote Kubernetes cluster?

I’ve been doing infrastructure-related work all my life, and the closest I have come to building something like a “desktop app” is my nixos-config.

Just realised that most of the time I even self-host the Kubernetes clusters and run them locally as well. Never mind, you get my point.

Let’s build it!

By the way, can an LLM semantically recognise that NixOS was that obscure Linux distribution? Maybe. But if it can’t, then this line might help.

Building an MVP TTB (Thing That Builds)

I started out by searching for similar solutions. I was expecting to find lots of similar projects, but either all search engines are going downhill (yes, they are), or for some reason this is not something many people have considered doing before.

Eventually, I’ve found this great blog, which is quite close to what I also wanted to implement.

I was able to reverse/augment-engineer the solution described there, and had a working example in less than two hours, which is quite nice.

A few words on my LLM background. I have never used ChatGPT or any of the other big players, but I like to read about local LLM projects, and I have some idea about the basics of related concepts, e.g. temperature, embeddings, vector DBs, etc., but that’s about it. My only practical experience was trying out LocalAI, and adding ConversationBufferMemory into the very early privateGPT.py after it came out.

I started by adding the SQLite Manager extension to Firefox, dropped my places.sqlite from /Users/krisztianfekete/Library/Application Support/Firefox/Profiles/ into the extension, then executed the following query:

```sql
SELECT url from "moz_places" LIMIT 10
```

Then, I exported the result into a CSV file and used that during development to keep the feedback loop fast.
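The same export can also be scripted. Below is a minimal sketch using only Python’s standard library; it assumes the moz_places table and its url column from the query above, and the function name and CSV layout are illustrative. It queries a copy of the database (plus its -wal file, if present), because reading places.sqlite directly while Firefox is running can fail with “database is locked” errors.

```python
import csv
import shutil
import sqlite3
import tempfile
from pathlib import Path


def export_places_to_csv(places_db: Path, csv_path: Path, limit: int = 10) -> int:
    """Dump up to `limit` URLs from Firefox's moz_places table into a CSV file.

    Works on a temporary copy of places.sqlite so the live database is never
    touched. Returns the number of rows written.
    """
    with tempfile.TemporaryDirectory() as tmp:
        # Copy the main file and, if present, its write-ahead log, so recent
        # visits that are not yet checkpointed are included.
        for suffix in ("", "-wal"):
            src = places_db.with_name(places_db.name + suffix)
            if src.exists():
                shutil.copy2(src, Path(tmp) / ("places.sqlite" + suffix))
        conn = sqlite3.connect(Path(tmp) / "places.sqlite")
        try:
            rows = conn.execute(
                "SELECT url FROM moz_places LIMIT ?", (limit,)
            ).fetchall()
        finally:
            conn.close()

    with open(csv_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["url"])
        writer.writerows(rows)
    return len(rows)
```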

So far, this is very similar to what the original blog describes, and I followed the ideas described there until I had a working example.

I also took a shortcut and started with text/html pages only, using txtai, Qdrant, and even Bottle to get the thing running. Since the blog I was using was easy to follow, I soon had a working implementation in under 100 LOC!

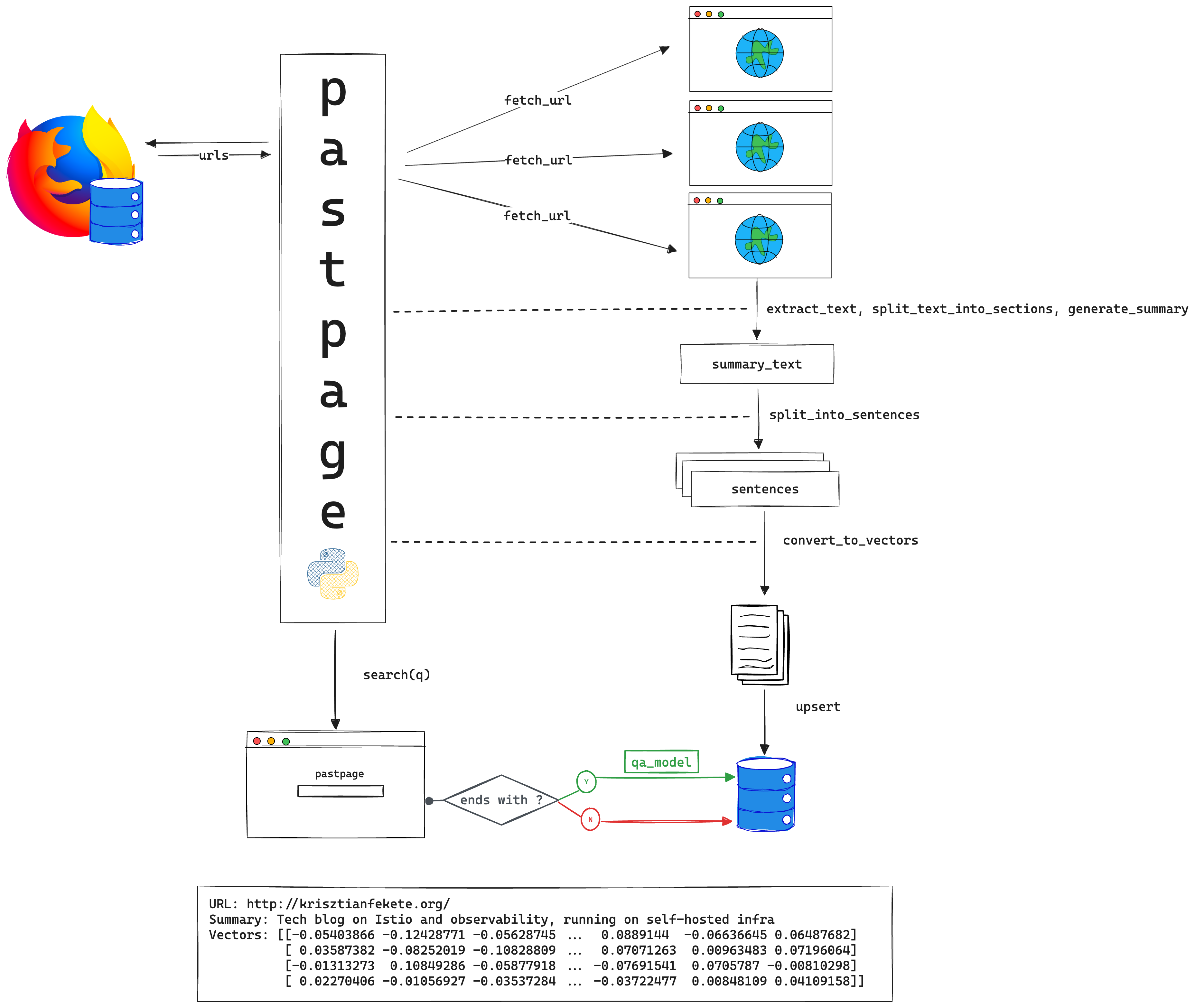

Then I made some modifications and switched to this workflow:

- extract text from the web sites

- split the text into sections with some overlap (hoping to get better semantic context this way)

- generate summaries from the sections

- split the summaries into sentences

- create embeddings (generate vectors) from the sentences

- upsert the vectors into the vector database (this is what we will query)
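To make the steps above concrete, here is a dependency-free sketch of the same flow. It is not the txtai/Qdrant implementation: summarize() is a placeholder for a summarization model, embed() is a toy hashed bag-of-words vector standing in for a real embedding model, and a plain dict stands in for the vector database. All function names and the default section size and overlap are hypothetical.

```python
import re
import zlib
from math import sqrt

DIM = 256  # size of the toy embedding vectors


def split_with_overlap(words, size=200, overlap=50):
    """Split a word list into sections of `size` words sharing `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be at least 0 and smaller than size")
    if not words:
        return []
    step = size - overlap
    return [words[i:i + size] for i in range(0, max(len(words) - overlap, 1), step)]


def summarize(section):
    # Placeholder: a real pipeline would call a summarization model here.
    return " ".join(section)


def split_sentences(text):
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def embed(sentence):
    """Toy embedding: hashed bag of words, normalised to unit length."""
    vec = [0.0] * DIM
    for token in re.findall(r"\w+", sentence.lower()):
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def index_page(store, url, text, size=200, overlap=50):
    """text -> overlapping sections -> summaries -> sentences -> vectors -> upsert."""
    sections = split_with_overlap(text.split(), size, overlap)
    for s_idx, section in enumerate(sections):
        for n_idx, sentence in enumerate(split_sentences(summarize(section))):
            # A deterministic key makes this an upsert rather than an append.
            store[(url, s_idx, n_idx)] = (embed(sentence), sentence)


def search(store, query, k=3):
    """Return the k best (cosine score, url, sentence) matches for a query."""
    q = embed(query)
    scored = [
        (sum(a * b for a, b in zip(q, vec)), key[0], sentence)
        for key, (vec, sentence) in store.items()
    ]
    return sorted(scored, reverse=True)[:k]
```

Keying each vector by (url, section, sentence) is what turns the last step into an upsert: re-indexing the same page replaces entries with the same key instead of adding duplicates.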

I don’t want to get into the theory behind everything here: I am not an AI pro, and there are already lots of papers and articles out there on what embeddings are.

As you might expect from a naive approach like this, the quality of the summaries will largely depend on the text extracted from the web sites.

You also have to consider how you plan to use the application when making certain decisions during development. Let’s say I know that most of the time I will probably just enter a few search terms. In this case, it’s probably better to create embeddings from sentences rather than from sections or paragraphs, since a short query is closer in scope to a single sentence than to a long passage covering several topics.

More advanced chunking techniques could also be beneficial, e.g. paying attention to code blocks or to the type of the content itself. There’s clearly a difference between processing a text-only dump of a blog built with a static site generator and doing the same with a dump of a Hacker News thread, which comes with no guarantee of consistency in style, content, or both.
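As one example of paying attention to code blocks, here is a sketch, assuming the input is raw HTML, that uses the standard library’s html.parser to keep everything inside a pre element as a single verbatim “code” chunk, while collapsing whitespace only in the surrounding prose. The tag sets and the class name are assumptions for illustration; real pages will need more cases handled.

```python
from html.parser import HTMLParser

# Tags whose contents are never indexed, and tags that imply a word break.
SKIP_TAGS = {"script", "style"}
BLOCK_TAGS = {"p", "div", "li", "br", "tr", "td", "h1", "h2", "h3", "h4", "h5", "h6"}


class ProseCodeSplitter(HTMLParser):
    """Split HTML into ("prose", text) and ("code", text) chunks.

    Everything inside <pre> becomes one verbatim "code" chunk, so a
    prose-oriented splitter never cuts a code sample in half.
    """

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._buf = []
        self._pre_depth = 0
        self._skip_depth = 0

    def _flush(self, kind):
        text = "".join(self._buf)
        self._buf = []
        if kind == "prose":
            text = " ".join(text.split())  # collapse whitespace in prose only
        if text.strip():
            self.chunks.append((kind, text))

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1
        elif tag == "pre":
            if self._pre_depth == 0:
                self._flush("prose")  # close the prose chunk before the code
            self._pre_depth += 1
        elif tag in BLOCK_TAGS and not self._pre_depth:
            self._buf.append(" ")

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "pre" and self._pre_depth:
            self._pre_depth -= 1
            if self._pre_depth == 0:
                self._flush("code")
        elif tag in BLOCK_TAGS and not self._pre_depth:
            self._buf.append(" ")

    def handle_data(self, data):
        if not self._skip_depth:
            self._buf.append(data)

    def close(self):
        super().close()
        self._flush("prose")  # whatever prose is left after the last tag


def split_prose_and_code(html):
    parser = ProseCodeSplitter()
    parser.feed(html)
    parser.close()
    return parser.chunks
```

Prose chunks can then go through the sentence-level pipeline, while code chunks could be embedded whole or skipped, depending on what you expect to search for.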

Browser integration and adding QA capabilities

browser-history

I have zero experience building browser extensions (although that UX would definitely be better for this project), so I decided to run this locally, maybe in a container. I also wanted to make the integration with the browser more seamless, so I looked into how I could solve this with Python.

I settled on browser-history, a zero-dependency, cross-platform Python package that supports most browsers. Exactly what I needed.

My only problem with this package was that it’s not part of nixpkgs, and I am developing on NixOS, so I had to add it to my flake.nix like this:

```nix
...
browser-history = pkgs.python310Packages.buildPythonApplication rec {
  pname = "browser-history";
  version = "0.4.0";
  src = pkgs.python310Packages.fetchPypi {
    inherit pname version;
    # nix-prefetch-url --unpack https://files.pythonhosted.org/packages/source/b/browser-history/browser-history-0.4.0.tar.gz
    sha256 = "sha256-qumfjwUbH1oDsNm37bgyneyB1g5dHR+TMv0buvlIPz8=";
  };
  ...
pythonTools = with pkgs.python310Packages; [
  pip
  virtualenv
  txtai # This one is already part of python310Packages
  browser-history # And this is my custom package from above, referenced the same way!
  ...
];
...
```

Pulling the websites from Firefox via browser-history was straightforward, and I could easily sort them as well, so the app starts by processing the websites I visited most recently.

To keep the feedback loop fast during development, I added a --last flag. With it, I can pass a time window such as --last 5m, 10h, or 20d to work with only that slice of my history.
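A flag like this can be parsed with argparse. The sketch below assumes m, h and d mean minutes, hours and days, and that each history entry starts with a (visit time, URL) pair with timezone-aware timestamps; I believe that matches browser-history’s histories output, but check it against the version you use. parse_window, recent_first and build_parser are hypothetical helper names.

```python
import argparse
import re
from datetime import datetime, timedelta, timezone

# Assumed meaning of the --last suffixes: minutes, hours, days.
UNITS = {"m": "minutes", "h": "hours", "d": "days"}


def parse_window(value):
    """Turn '5m', '10h' or '20d' into a timedelta (used as an argparse type)."""
    match = re.fullmatch(r"(\d+)([mhd])", value.strip())
    if match is None:
        raise argparse.ArgumentTypeError(
            f"expected something like 5m, 10h or 20d, got {value!r}"
        )
    amount, unit = match.groups()
    return timedelta(**{UNITS[unit]: int(amount)})


def recent_first(histories, window, now=None):
    """Keep entries visited within `window` of `now`, newest first.

    Assumes each entry starts with (visit_time, url) and that visit_time is
    timezone-aware, so it can be compared with an aware `now`.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - window
    recent = [entry for entry in histories if entry[0] >= cutoff]
    return sorted(recent, key=lambda entry: entry[0], reverse=True)


def build_parser():
    parser = argparse.ArgumentParser(prog="pastpage")
    parser.add_argument(
        "--last",
        type=parse_window,
        default=None,
        help="only process history from this window, e.g. 5m, 10h or 20d",
    )
    return parser
```

Using parse_window as the argparse type means an invalid value such as 5w is rejected with a normal usage error before any history is loaded.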

If someone doesn’t want to run the tool continuously, they can just spin it up at 5pm with the corresponding flags to search all the websites they visited that day.

I was (and still am) toying with the idea of adding a --session mode, so you can proactively start a research session right before you expect to go through lots of web pages, and keep the history cleaner. I am still not sure if this is something anyone would ever use; maybe it’s just another feature that sounds good during development but is not very useful in practice.

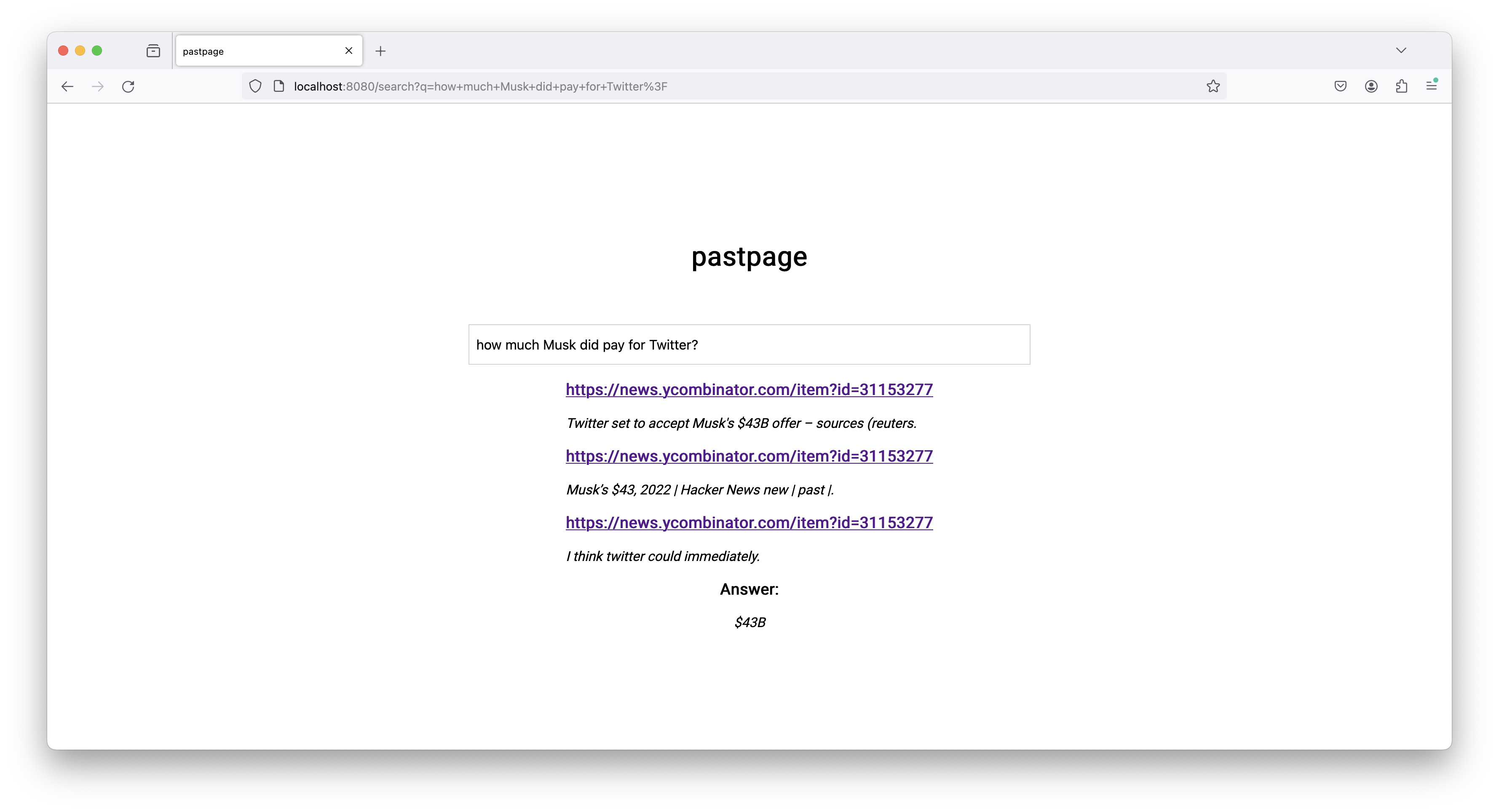

This is what it looks like in action.

QA model

Another feature I wanted to add was the ability to ask questions of your browser history. I am not talking about having a conversation with it (at least initially), but I think being able to ask a question and get a (potentially) useful answer can be fun.

I decided not to add a dedicated ASK button, mainly because I don’t even have a SEARCH button to begin with (I like simplicity), so I came up with the following UX.

You have a search bar, you type something into it, and normally it gives you results from the vector database. BUT, if you put a ? at the end, you turn it into a question, which triggers a QA model that tries to answer it.

Currently, I still display the regular search results regardless of the question mark, as I haven’t always had great results with the model’s answer alone.

This is my simplified code that implements this functionality:

```python
# q is the search term from the request; qa_model, qa_model_name,
# qa_ans_duration, context, query and environment are set up elsewhere.

# Check if the search term is a question
if q.endswith('?'):
    # Use the question answering model to generate a response,
    # timed by the Prometheus histogram labelled with the model name
    with qa_ans_duration.labels(model=qa_model_name).time():
        result = qa_model(question=q, context=context)
    answer = result['answer']
    hits = query(q)
    template = environment.get_template("index.html")
    args = {
        "args": {"title": "pastpage"},
        "description": "Search your browser history",
        "hits": hits,
        "query": q,
        "answer": answer,
    }
    content = template.render(**args)
    return content
else:
    # Use standard search functionality
    hits = query(q)
    template = environment.get_template("index.html")
    args = {
        "args": {"title": "pastpage"},
        "description": "Search your browser history",
        "hits": hits,
        "query": q,
    }
    content = template.render(**args)
    return content
```

Evaluating various LLM models

If you take a closer look at the code snippet above, you can see that I instrumented the qa_model pipeline, so I have a Prometheus Histogram metric that shows how long it takes to get an answer from the model.

I also added a label called model, so I can compare the performance of various models by setting its value to the name of the model I am currently using.

I did the same with the summary generation logic as well.

This is one of the simplest and cheapest ways to get this information, so I recommend following this practice: it makes it easier to reason about performance differences when you are playing with various models, context sizes, and chunking strategies.
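In the app, this job is done by a prometheus_client Histogram with a model label. If you want the same per-model comparison in a quick experiment without running Prometheus, a dependency-free stand-in could look like the sketch below. ModelTimer is a hypothetical helper, and it reports only count, mean and median rather than histogram buckets.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from statistics import mean, median


class ModelTimer:
    """Collect wall-clock durations per model name, like a labelled histogram."""

    def __init__(self):
        self.samples = defaultdict(list)

    @contextmanager
    def measure(self, model):
        start = time.perf_counter()
        try:
            yield
        finally:
            # Recorded even if the timed block raises.
            self.samples[model].append(time.perf_counter() - start)

    def summary(self):
        """Return {model: (count, mean_seconds, median_seconds)}."""
        return {
            model: (len(durations), mean(durations), median(durations))
            for model, durations in self.samples.items()
        }
```

It is used the same way as the histogram in the snippet above, e.g. wrapping the qa_model call in `with timer.measure(qa_model_name):`, and printing `timer.summary()` after a few queries per model.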

Comparing the performance of models is easier than comparing their quality. As with input processing, different types of content can benefit from different models.

Let’s take sshleifer/distilbart-cnn-12-6 as an example. This model was trained on the CNN Dailymail Dataset, which is “an English-language dataset containing just over 300k unique news articles as written by journalists at CNN and the Daily Mail”. This should work great for generic news websites, but might fall short on more technical content.

Or take pszemraj/led-large-book-summary as another example. This one was trained on a dataset that “covers source documents from the literature domain, such as novels, plays and stories, and includes highly abstractive, human written summaries on three levels of granularity of increasing difficulty: paragraph-, chapter-, and book-level”.
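Since different content benefits from different models, one option is to pick the summarization model per site. This is a hypothetical sketch: the list of news sites is illustrative, only the CNN/Daily Mail model from above is mapped, and everything else falls back to a default model of your choice. The returned name could then be passed to a Hugging Face transformers pipeline such as `pipeline("summarization", model=...)`.

```python
from urllib.parse import urlparse

# Illustrative routing table, not an exhaustive or recommended list.
NEWS_SITES = ("cnn.com", "dailymail.co.uk")
MODEL_BY_KIND = {"news": "sshleifer/distilbart-cnn-12-6"}


def content_kind(url):
    """Classify a URL as "news" if its host is (a subdomain of) a known news site."""
    host = (urlparse(url).hostname or "").lower()
    if any(host == site or host.endswith("." + site) for site in NEWS_SITES):
        return "news"
    return "other"


def pick_summarizer(url, default_model):
    """Return the summarization model name to use for this URL."""
    return MODEL_BY_KIND.get(content_kind(url), default_model)
```

Matching on the host suffix (with a leading dot) catches subdomains like edition.cnn.com without accidentally matching unrelated hosts such as notcnn.com.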

One funny story about using this model was that it called me Konstantin quite reliably while processing my own blog. Well, that’s an option for a pen name, I guess.

What’s next?

Here’s what I have now. It’s not an architecture diagram, more like a visual guide to understand the current flow.

It was fun putting this together, but there are lots of ways to improve the current implementation.

Without providing an extensive list, I think these are the most interesting ways to go from here:

- Turning it into a browser extension and/or migrating to Transformers.js

- Implementing more sophisticated text extraction/processing logic

Feel free to reach out if you have any suggestions, questions, or just feedback!