Upgrading to Istio 1.10

Preface

This was the first production Istio upgrade for this blog. I was running 1.9.3, which was the latest version when I started this project.

Until this point, I had an uptime of 77d, without any issues.

    root@k3d-large:~# kubectl get all -n istio-system
    NAME                                        READY   STATUS    RESTARTS   AGE
    pod/istiod-654769fb5d-dhmgg                 1/1     Running   0          77d
    pod/istio-ingressgateway-5985b6f5d4-wstd5   1/1     Running   0          77d
    pod/svclb-istio-ingressgateway-klstv        5/5     Running   0          77d
    pod/prometheus-f6fbcfc95-cxx42              2/2     Running   0          77d

Overall, the upgrade was smooth sailing. I only had some temporary issues due to resource limitations (I am running all of this on a surprisingly small instance).

Upgrade notes, changes

This minor version introduces a networking change: the proxies no longer redirect traffic to the lo interface, but forward it to the eth0 interface instead.

This change is explained in detail in one of the official blog posts, and it’s mentioned in the upgrade notes as well.

There are also changes to the sidecar injection logic, and the .global stub domain is now deprecated due to ISTIO-SECURITY-2021-006, which affected multiple earlier 1.8 and 1.9 versions.

Make sure to check these points if you are running a cluster with any of the following versions: 1.8.x, 1.9.0-1.9.5, or 1.10.0-1.10.1. Prior to 1.10.2, Istio contains a remotely exploitable vulnerability if all of the conditions listed in the security bulletin are met.
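
To make the version ranges above easier to check against a running cluster, here is a minimal Python sketch. The function name `is_affected` is made up for this example, and the ranges are copied from the paragraph above rather than from an official data source, so always confirm against the security bulletin itself.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Split a plain 'major.minor.patch' string into integers."""
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch


def is_affected(version: str) -> bool:
    """Return True if the version falls into one of the ranges listed above.

    Ranges (assumed from the text): 1.8.x, 1.9.0-1.9.5, 1.10.0-1.10.1.
    Versions outside 1.8-1.10 are simply reported as not listed.
    """
    major, minor, patch = parse_version(version)
    if (major, minor) == (1, 8):
        return True          # every 1.8.x release
    if (major, minor) == (1, 9):
        return patch <= 5    # 1.9.0-1.9.5
    if (major, minor) == (1, 10):
        return patch <= 1    # 1.10.0-1.10.1
    return False


for candidate in ("1.9.3", "1.10.3"):
    status = "in a listed range" if is_affected(candidate) else "not in a listed range"
    print(candidate, status)
```

A result of `False` only means the version is outside the ranges quoted here. It says nothing about other advisories.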

In-place upgrade with Helmfile

Traditionally, I have performed in-place upgrades, originally by passing my custom profile.yaml to istioctl and then making adjustments by hand if needed.

However, since all my Istio configuration lives in a helmfile for this project, I can just bump the version tags, run helmfile apply, and my control plane starts to upgrade.
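
Bumping the version tags can be done by hand, but as a rough illustration, the Python sketch below rewrites the `ref=` query parameter of the chart URLs and the `tag:` values in one pass. The helper `bump_istio_version` and the sample helmfile fragment (including its indentation) are assumptions made for this example, not the actual file from this project.

```python
import re


def bump_istio_version(helmfile_text: str, old: str, new: str) -> str:
    """Replace `ref=<old>` in chart URLs and `tag: <old>` in values."""
    old_re = re.escape(old)
    # Chart repository URLs end in ...&ref=<version>
    text = re.sub(rf"(ref=){old_re}\b", rf"\g<1>{new}", helmfile_text)
    # Image tags appear as `tag: <version>` under `global:`
    text = re.sub(rf"(tag:\s*){old_re}\b", rf"\g<1>{new}", text)
    return text


# Hypothetical, shortened helmfile fragment used only for demonstration.
SAMPLE = """\
repositories:
  - name: istio
    url: git+https://github.com/istio/istio@manifests/charts?sparse=0&ref=1.9.3
releases:
  - values:
      - global:
          hub: docker.io/istio
          tag: 1.9.3
"""

print(bump_istio_version(SAMPLE, "1.9.3", "1.10.3"))
```

After writing the result back to helmfile.yaml, running `helmfile diff` first (it relies on the helm-diff plugin) shows what would change before `helmfile apply` rolls it out.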

The diff of an upgrade from 1.9.3 to 1.10.3 looks like this:

--- a/helmfile.yaml

+++ b/helmfile.yaml

@@ -4,11 +4,11 @@ repositories:

- name: istio

- url: git+https://github.com/istio/istio@manifests/charts?sparse=0&ref=1.9.3

+ url: git+https://github.com/istio/istio@manifests/charts?sparse=0&ref=1.10.3

- name: istio-control

- url: git+https://github.com/istio/istio@manifests/charts/istio-control?sparse=0&ref=1.9.3

+ url: git+https://github.com/istio/istio@manifests/charts/istio-control?sparse=0&ref=1.10.3

- name: istio-gateways

- url: git+https://github.com/istio/istio@manifests/charts/gateways?sparse=0&ref=1.9.3

+ url: git+https://github.com/istio/istio@manifests/charts/gateways?sparse=0&ref=1.10.3

releases:

@@ -58,7 +58,7 @@ releases:

values:

- global:

hub: docker.io/istio

- tag: 1.9.3

+ tag: 1.10.3

- pilot:

resources:

requests:

@@ -78,7 +78,7 @@ releases:

values:

- global:

hub: docker.io/istio

- tag: 1.9.3

+ tag: 1.10.3

Then, behind the scenes, the Helm charts will take care of the changes, for example:

...

containers:

- name: discovery

- image: "docker.io/istio/pilot:1.9.3"

+ image: "docker.io/istio/pilot:1.10.3"

args:

...

...

+

istio-system, tcp-metadata-exchange-1.8, EnvoyFilter (networking.istio.io) has been removed:

- # Source: istio-discovery/templates/telemetryv2_1.8.yaml

- apiVersion: networking.istio.io/v1alpha3

- kind: EnvoyFilter

- metadata:

- name: tcp-metadata-exchange-1.8

- namespace: istio-system

...

...

+

istio-system, metadata-exchange-1.10, EnvoyFilter (networking.istio.io) has been added:

-

+ # Source: istio-discovery/templates/telemetryv2_1.10.yaml

+ # Note: metadata exchange filter is wasm enabled only in sidecars.

+ apiVersion: networking.istio.io/v1alpha3

+ kind: EnvoyFilter

+ metadata:

+ name: metadata-exchange-1.10

+ namespace: istio-system

...
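
Once the charts have been applied, it can be useful to confirm that no pod is still running an old image. The sketch below is one possible way to do that in Python: it parses the JSON printed by `kubectl get pods -n istio-system -o json` and lists containers from the Istio hub whose tag differs from the expected one. The function name `stale_istio_pods` and the pod names in the sample are invented for illustration.

```python
import json
from typing import Dict, List


def stale_istio_pods(pod_list_json: str, expected_tag: str,
                     hub: str = "docker.io/istio") -> Dict[str, List[str]]:
    """Map pod name -> Istio images that do not carry the expected tag."""
    pods = json.loads(pod_list_json)
    stale: Dict[str, List[str]] = {}
    for pod in pods.get("items", []):
        name = pod["metadata"]["name"]
        for container in pod["spec"]["containers"]:
            image = container["image"]
            # Only look at images from the Istio hub; ignore everything else.
            if image.startswith(hub + "/") and not image.endswith(":" + expected_tag):
                stale.setdefault(name, []).append(image)
    return stale


# Hypothetical, trimmed-down PodList used only for demonstration.
SAMPLE_PODS = json.dumps({
    "items": [
        {"metadata": {"name": "istiod-example"},
         "spec": {"containers": [{"image": "docker.io/istio/pilot:1.10.3"}]}},
        {"metadata": {"name": "istio-ingressgateway-example"},
         "spec": {"containers": [{"image": "docker.io/istio/proxyv2:1.9.3"}]}},
    ]
})

print(stale_istio_pods(SAMPLE_PODS, "1.10.3"))
```

In practice the JSON would come from the kubectl command mentioned above. An empty result suggests that every Istio container in the namespace already runs the target tag.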

Performance

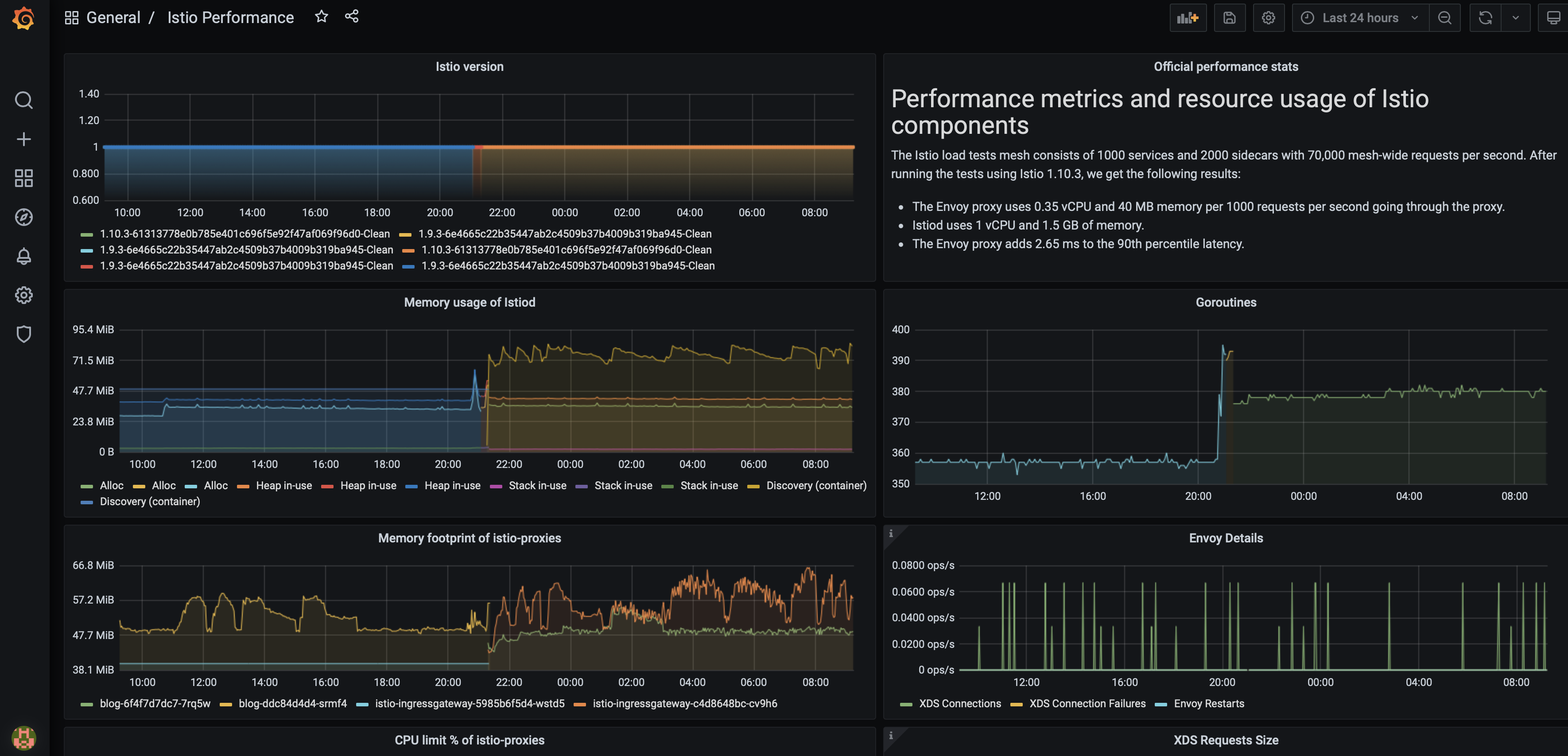

Istio 1.9.x made significant improvements regarding memory usage by dropping the footprint of the proxies by 20-30%. This trend may or may not continue with 1.10.x versions.

While I can see reduced memory usage at the data-plane level to some extent, I cannot really see it on the control-plane side.

We can see a decrease for the blog pods. Note, however, that the line for ingressgw, which used to be almost flat, has now become a bit unstable.

Let’s take a look at the control-plane.

Here, Alloc and Heap-in-use are almost the same, and Stack-in-use decreased by ~30%, but the memory usage of the Discovery container increased by around the same amount.

There’s an open issue on GitHub reporting the same experience, where the root cause is still being investigated.

The Go runtime changed between 1.9.x and 1.10.x: all the components now run on Go 1.16, which might be related to the change in metrics.

Keep this in mind before upgrading. Otherwise, the upgrade itself was trouble-free.