Upgrading to Istio 1.11

Preface

This is the second Istio upgrade for the blog. It was uneventful, which is a good thing.

I work with Istio at my job as well, and so far the blog's upgrades to 1.10 and 1.11 have preceded the upgrades at work. The configuration and the traffic load are different, of course, but piloting an upgrade in my own production environment first gives me a chance to catch bugs early.

Note: as I work in a team, I am not always the one performing those upgrades, but I try to stay involved and to monitor performance after they have taken place.

My plan is to revisit these posts from time to time and add what I learn from upgrading those larger service meshes.

Upgrade notes, changes

Browsing through the 1.11 upgrade notes, I found no high-risk changes.

The notable changes are that the istiodRemote component now simplifies remote cluster installation, the EnvoyFilter semantics have changed, and the host header fallback is disabled for all inbound metrics.

Let’s take a closer look at the last one.

Before 1.11, the host header fallback was disabled only for Gateway traffic; now it is disabled for all inbound traffic. But what does host header fallback mean?

Istio metrics carry a label called destination_service. If Envoy cannot determine its value by itself, it falls back to the value of the request's host header.

Clients can send many different host header values, so this fallback can lead to high cardinality: every distinct value produces a new set of time series.

With the fallback disabled, the load on your Prometheus instances can decrease, because no time series are created for all those header values.
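To make the cardinality argument concrete, here is a toy model in Python. It is a sketch under stated assumptions, not how Envoy or Istio actually compute metrics: each request is reduced to three hypothetical labels (source workload, response code, destination_service), Envoy is assumed to be unable to resolve destination_service for any of them, and the placeholder used when the fallback is off is assumed to be `"unknown"`.

```python
"""Toy model: why a host header fallback inflates metric cardinality.

Illustrative assumptions only: labels are reduced to
(source_workload, response_code, destination_service), and
destination_service could not be resolved for any request.
"""

from typing import Iterable, NamedTuple


class Request(NamedTuple):
    source_workload: str
    response_code: int
    host_header: str


def count_series(requests: Iterable[Request], host_fallback: bool) -> int:
    """Return the number of distinct label sets, i.e. time series, produced."""
    series = set()
    for req in requests:
        # With the fallback, the unresolved label is filled from the host header;
        # without it, every such request shares one placeholder value
        # (assumed to be "unknown" in this sketch).
        destination = req.host_header if host_fallback else "unknown"
        series.add((req.source_workload, req.response_code, destination))
    return len(series)


# Hypothetical traffic: one client reaching the same service through 50 host
# names (e.g. random subdomains), each answered with either 200 or 404.
traffic = [
    Request("frontend", code, f"host-{i}.example.com")
    for i in range(50)
    for code in (200, 404)
]

print(count_series(traffic, host_fallback=True))   # 100
print(count_series(traffic, host_fallback=False))  # 2
```

Every extra host header value multiplies with the other label values, so the series count grows with the number of distinct hosts; with the fallback disabled it stays bounded by the remaining labels.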

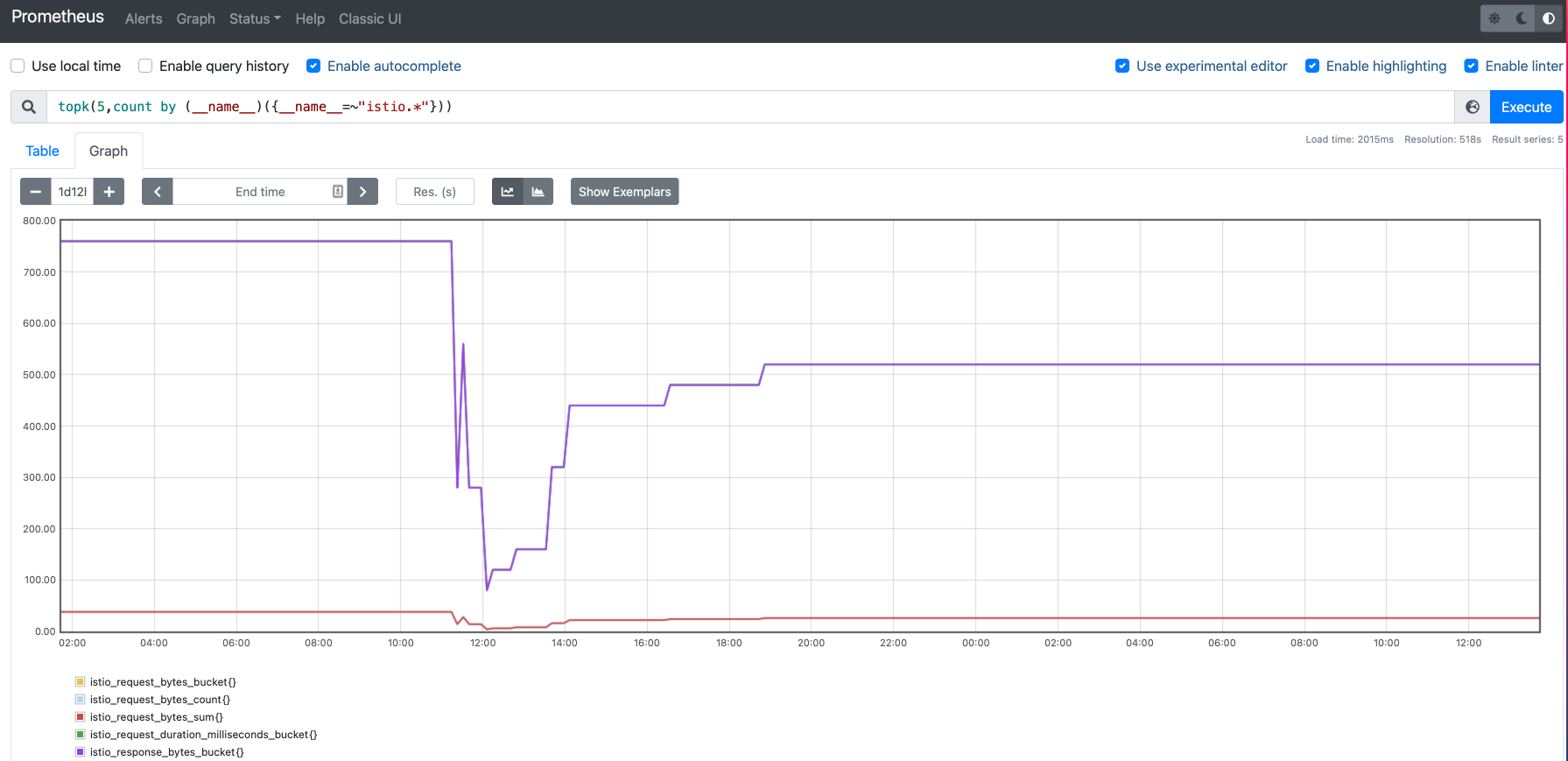

After the upgrade, I observed this drop in Istio metrics.

You can use this query to list the five metric names starting with istio that have the most time series:

```promql
topk(5, count by (__name__)({__name__=~"istio.*"}))
```

It’s a nice little improvement, especially if you are not using other metric optimizations such as recording rules.
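If you want to track this number from a script rather than the Prometheus UI, the same query can be sent to Prometheus' HTTP API (`/api/v1/query`). The sketch below is one way to do it with only the standard library; the `PROMETHEUS_URL` address and the helper names are hypothetical, so adjust them to your setup (for example, a port-forward to your Prometheus service).

```python
from __future__ import annotations

import json
import urllib.parse
import urllib.request

# Hypothetical address, e.g. after a `kubectl port-forward` to Prometheus.
PROMETHEUS_URL = "http://localhost:9090"
QUERY = 'topk(5, count by (__name__)({__name__=~"istio.*"}))'


def build_query_url(base_url: str, query: str) -> str:
    """Build an instant-query URL for the /api/v1/query endpoint."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": query})


def parse_series_counts(payload: dict) -> list[tuple[str, int]]:
    """Turn an instant-vector response into (metric name, series count) pairs, largest first."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    counts = [
        # Each sample's value is [unix_timestamp, "number as string"].
        (sample["metric"].get("__name__", ""), int(float(sample["value"][1])))
        for sample in payload["data"]["result"]
    ]
    # Sort explicitly instead of relying on the order of the response.
    return sorted(counts, key=lambda pair: pair[1], reverse=True)


def fetch_series_counts(base_url: str = PROMETHEUS_URL) -> list[tuple[str, int]]:
    """Run QUERY against a live Prometheus and return the parsed counts."""
    url = build_query_url(base_url, QUERY)
    with urllib.request.urlopen(url, timeout=10) as resp:
        return parse_series_counts(json.load(resp))
```

Calling `fetch_series_counts()` before and after an upgrade gives you two lists you can compare; the parsing is kept separate from the network call so it can be checked against a saved response.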

Performance

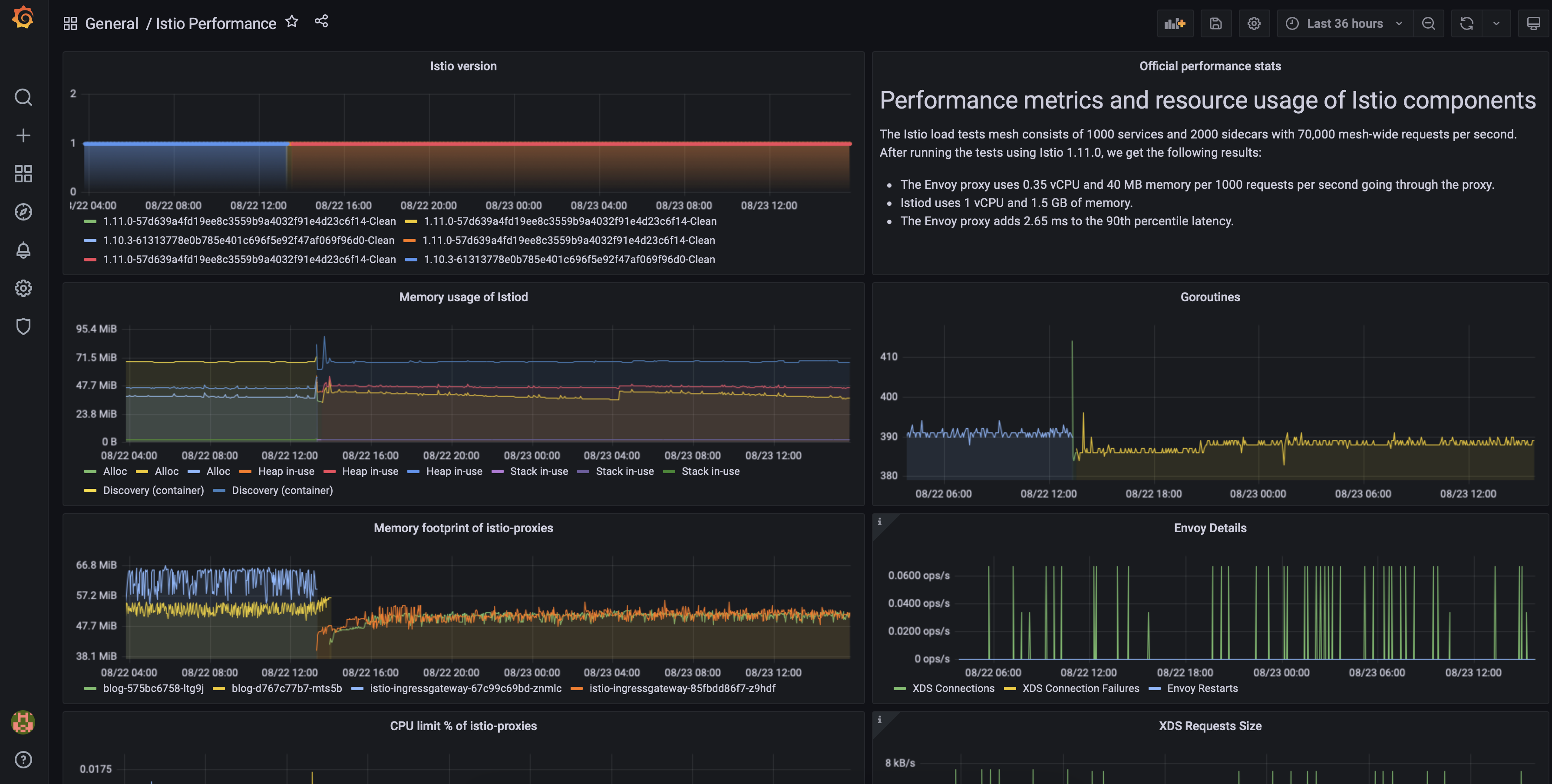

After the wild ride of release 1.10, the memory usage of the Envoy proxies seems to have stabilised a bit.

This query gives you the memory usage of the istio-proxy container in each injected pod:

```promql
sum(container_memory_working_set_bytes{container="istio-proxy"}) by (pod)
```

It’s still a bit erratic, but the footprint is lower and the spikes are smaller, so we are heading in the right direction.
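To put rough numbers on "lower footprint, smaller spikes" instead of judging the graph by eye, you could run the memory query as a range query (`/api/v1/query_range`) and summarise the result per pod. This is a minimal sketch assuming `payload` is that endpoint's decoded JSON response (fetching it, and choosing start, end and step, is left out); the function names are hypothetical, and using the peak-to-average ratio as a "spikiness" measure is my own choice for illustration.

```python
from __future__ import annotations

from statistics import mean

BYTES_PER_MIB = 1024 * 1024


def summarise_memory(payload: dict) -> dict[str, tuple[float, float]]:
    """Map each pod to (average MiB, peak MiB) over the queried time range."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    summary: dict[str, tuple[float, float]] = {}
    for series in payload["data"]["result"]:
        # A range query returns a matrix: each sample is [unix_timestamp, "bytes as string"].
        mib = [float(value) / BYTES_PER_MIB for _, value in series["values"]]
        summary[series["metric"]["pod"]] = (mean(mib), max(mib))
    return summary


def spikiest_pods(summary: dict[str, tuple[float, float]], n: int = 5) -> list[tuple[str, float]]:
    """Rank pods by peak-to-average ratio; values close to 1.0 mean a flat memory profile."""
    ratios = [(pod, peak / avg) for pod, (avg, peak) in summary.items() if avg > 0]
    return sorted(ratios, key=lambda item: item[1], reverse=True)[:n]
```

Comparing the averages and the ratios for the same time window before and after an upgrade turns the visual impression into a couple of numbers per pod.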

Istiod’s memory and CPU usage are roughly unchanged, so it looks like the earlier increase in the discovery container's usage is here to stay.

Here’s a snapshot of the Istio Performance dashboard I use after upgrades.