Upgrading to Istio 1.12, rethinking ops

Preface

It took a while, but this blog is now running on Istio 1.12.1.

The upgrade itself was smooth as usual, but this time I took the opportunity to rethink how I test and perform these upgrades, and to clean up some technical debt.

If you are only interested in the actual upgrade, then you can jump to the section called The actual upgrade. If you are also interested in the re-imagined operational details, keep reading!

Rethinking ops

These are the improvements I now have in place:

- a Terraform module to spin up a Hetzner instance with K3s installed

- environments (ondemand, production) defined in helmfile.yaml

- a blog.ondemand.yaml manifest that matches PROD 100%

Yes, that YAML file is not great. It could be packaged as a Helm chart and managed just like any other application on the platform. The only reason I am not doing that is that I am still exploring ways to separate infrastructure from apps while finding a balance. Until then, I will make do with this solution.

Let’s say I want to upgrade to the latest Istio. What I do not want is a canary/revision-based upgrade, nor do I want to risk live issues in production. How can I test the new release safely while avoiding both?

With the aforementioned tooling in place, I can spawn an on-demand replica of my production setup, expose it on a subdomain, and test the upgrade there first. I can even test a clean install this way, just as easily.

All this takes approximately 1 minute, including the time until all the pods are ready.

# Provisioning the instance and deploying k3d

terraform apply

16.078s

# Deploying Istio

helmfile --environment ondemand sync

34.585s

# Deploying the application

kubectl apply -f blog.ondemand.yaml

1.020s

Note: For ondemand, I am using a single-master, single-node K3d “cluster” on a cx31 instance, which costs me €0.0140/h.
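As a quick sanity check on the "approximately 1 minute" figure, the three timings above can simply be added up. The Go sketch below does only that; the helper name totalDuration is made up for this example.

```go
package main

import (
	"fmt"
	"time"
)

// totalDuration parses each duration string and returns their sum.
// Hypothetical helper, used only to add up the timings quoted above.
func totalDuration(steps ...string) (time.Duration, error) {
	var total time.Duration
	for _, s := range steps {
		d, err := time.ParseDuration(s)
		if err != nil {
			return 0, err
		}
		total += d
	}
	return total, nil
}

func main() {
	// terraform apply, helmfile sync, kubectl apply
	total, err := totalDuration("16.078s", "34.585s", "1.020s")
	if err != nil {
		panic(err)
	}
	fmt.Println("total:", total)
}
```

The sum is 51.683s, so the whole environment is up in a bit under a minute.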

Without this efficient way of recreating the exact replica of the blog, I would probably always try new things out in PROD first.

The actual upgrade

Migration to the new chart and repository

Due to the changes to the Helm installation method, I decided to migrate to the new Istio Gateway chart (I was using these before), and also clean up my repositories.

Before:

repositories:
  - name: istio
    url: git+https://github.com/istio/istio@manifests/charts?sparse=0&ref=1.11.4
  - name: istio-control
    url: git+https://github.com/istio/istio@manifests/charts/istio-control?sparse=0&ref=1.11.4
  - name: istio-gateways
    url: git+https://github.com/istio/istio@manifests/charts/gateways?sparse=0&ref=1.11.4

After:

repositories:
  - name: istio
    url: https://istio-release.storage.googleapis.com/charts

I can manage my Istio charts like this:

releases:
  - name: istio-base
    chart: istio/base
    version: 1.12.1
    ...
  - name: istio-ingress
    chart: istio/gateway
    version: 1.12.1
    ...

I wanted to migrate my existing mesh without downtime, so I had to use the existing chart names to make this as seamless as possible.

However, there are a few things you should be aware of when migrating to the new chart.

As you can see above, my Ingress Gateway is called istio-ingress, so I am affected and it would break my installation.

The guide above suggests renaming it, and even offers an example:

helm upgrade istio-ingress manifests/charts/gateway \
  --set name=istio-ingressgateway \
  --set labels.app=istio-ingressgateway \
  --set labels.istio=ingressgateway \
  -n istio-system

Let’s say you want to keep the name of the existing release. What can you do in that case?

You can hack the chart’s helper partial, because this is where the istio- prefix is removed:

istio: {{ include "gateway.name" . | trimPrefix "istio-" }}
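To make the effect of that template line concrete, here is a minimal Go sketch of the same string operation. Helm's trimPrefix comes from the Sprig library and behaves like Go's strings.TrimPrefix. The function name gatewayIstioLabel is hypothetical, and the sketch assumes the gateway name is passed in as a plain string.

```go
package main

import (
	"fmt"
	"strings"
)

// gatewayIstioLabel mimics the chart's `trimPrefix "istio-"` step.
// Hypothetical helper for illustration; it is not part of the chart or of Istio.
func gatewayIstioLabel(gatewayName string) string {
	return strings.TrimPrefix(gatewayName, "istio-")
}

func main() {
	for _, name := range []string{"istio-ingress", "istio-ingressgateway", "my-gateway"} {
		fmt.Printf("%s -> istio=%s\n", name, gatewayIstioLabel(name))
	}
}
```

For a gateway named istio-ingress the resulting label is istio=ingress, while a name without the prefix is left untouched.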

Or, you can handle this on Istio’s side. Istio has the following function, which also falls back to istio-ingressgateway by default.

func getIngressGatewaySelector(ingressSelector, ingressService string) map[string]string {
	// Setup the selector for the gateway
	if ingressSelector != "" {
		// If explicitly defined, use this one
		return labels.Instance{constants.IstioLabel: ingressSelector}
	} else if ingressService != "istio-ingressgateway" && ingressService != "" {
		// Otherwise, we will use the ingress service as the default. It is common for the selector and service
		// to be the same, so this removes the need for two configurations
		// However, if its istio-ingressgateway we need to use the old values for backwards compatibility
		return labels.Instance{constants.IstioLabel: ingressService}
	} else {
		// If we have neither an explicitly defined ingressSelector or ingressService then use a selector
		// pointing to the ingressgateway from the default installation
		return labels.Instance{constants.IstioLabel: constants.IstioIngressLabelValue}
	}
}
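The branching is easier to follow with concrete inputs. The sketch below is a self-contained approximation of the function quoted above: it returns a plain map instead of labels.Instance, and the two constants are stand-ins whose values ("istio" and "ingressgateway") are assumptions made for this example rather than values copied from the Istio source.

```go
package main

import "fmt"

// Stand-ins for constants.IstioLabel and constants.IstioIngressLabelValue.
// The values are assumptions for this sketch.
const (
	istioLabelKey          = "istio"
	defaultIngressLabelVal = "ingressgateway"
)

// ingressGatewaySelector follows the same three branches as the quoted function.
func ingressGatewaySelector(ingressSelector, ingressService string) map[string]string {
	switch {
	case ingressSelector != "":
		// An explicit selector always wins.
		return map[string]string{istioLabelKey: ingressSelector}
	case ingressService != "istio-ingressgateway" && ingressService != "":
		// Only the service is set: its name is reused as the label value.
		return map[string]string{istioLabelKey: ingressService}
	default:
		// Nothing usable is set: fall back to the default gateway label.
		return map[string]string{istioLabelKey: defaultIngressLabelVal}
	}
}

func main() {
	fmt.Println(ingressGatewaySelector("", ""))
	fmt.Println(ingressGatewaySelector("", "istio-ingress"))
	fmt.Println(ingressGatewaySelector("ingress", "istio-ingress"))
}
```

With only the service name set to istio-ingress, the selector would be istio=istio-ingress. Since the chart strips the istio- prefix from the label, the gateway pods carry istio=ingress instead, which is why an explicit selector is needed in this setup.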

These variables can be configured via parameters that are documented here.

Adding them is as simple as passing the correct values to istiod’s chart, like this:

meshConfig:
  ingressSelector: ingress
  ingressService: istio-ingress

Upgrade notes, changes

Istio 1.12 introduces a new API, WasmPlugin, which makes WebAssembly plugins easier to manage, and the recently introduced Telemetry API was improved as well.

It’s a nice improvement that Envoy can finally track active connections, so it can exit once there are none left instead of waiting for the entire drain duration.

Another useful change is that TCP probes now work similarly to HTTP probes. Previously, these probes always succeeded, because the sidecars always accepted the connections.
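To illustrate why a TCP probe is so easy to satisfy, here is a minimal Go sketch. It is an analogy for the old behaviour only, not how kubelet or the sidecar is implemented: tcpProbe and probeBeforeAndAfterClose are hypothetical helpers, and a bare local listener plays the role of a proxy that accepts connections even though no application sits behind it.

```go
package main

import (
	"fmt"
	"net"
	"time"
)

// tcpProbe reports success if a TCP connection to addr can be opened in time.
// Simplified stand-in for a TCP probe; hypothetical helper.
func tcpProbe(addr string, timeout time.Duration) bool {
	conn, err := net.DialTimeout("tcp", addr, timeout)
	if err != nil {
		return false
	}
	conn.Close()
	return true
}

// probeBeforeAndAfterClose probes a local listener while it is open and again
// after it has been closed, and returns both results.
func probeBeforeAndAfterClose() (open, closed bool, err error) {
	// Nothing ever reads from this listener; it only accepts connections.
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return false, false, err
	}
	addr := ln.Addr().String()

	open = tcpProbe(addr, time.Second)
	ln.Close()
	closed = tcpProbe(addr, time.Second)
	return open, closed, nil
}

func main() {
	open, closed, err := probeBeforeAndAfterClose()
	if err != nil {
		panic(err)
	}
	fmt.Println("probe while something is listening:", open)
	fmt.Println("probe after the listener is closed:", closed)
}
```

The probe passes as long as anything accepts the connection, regardless of whether a healthy application is behind it.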

There’s another change that is affecting revision-based upgrades, but that is not relevant to my use case.

Performance

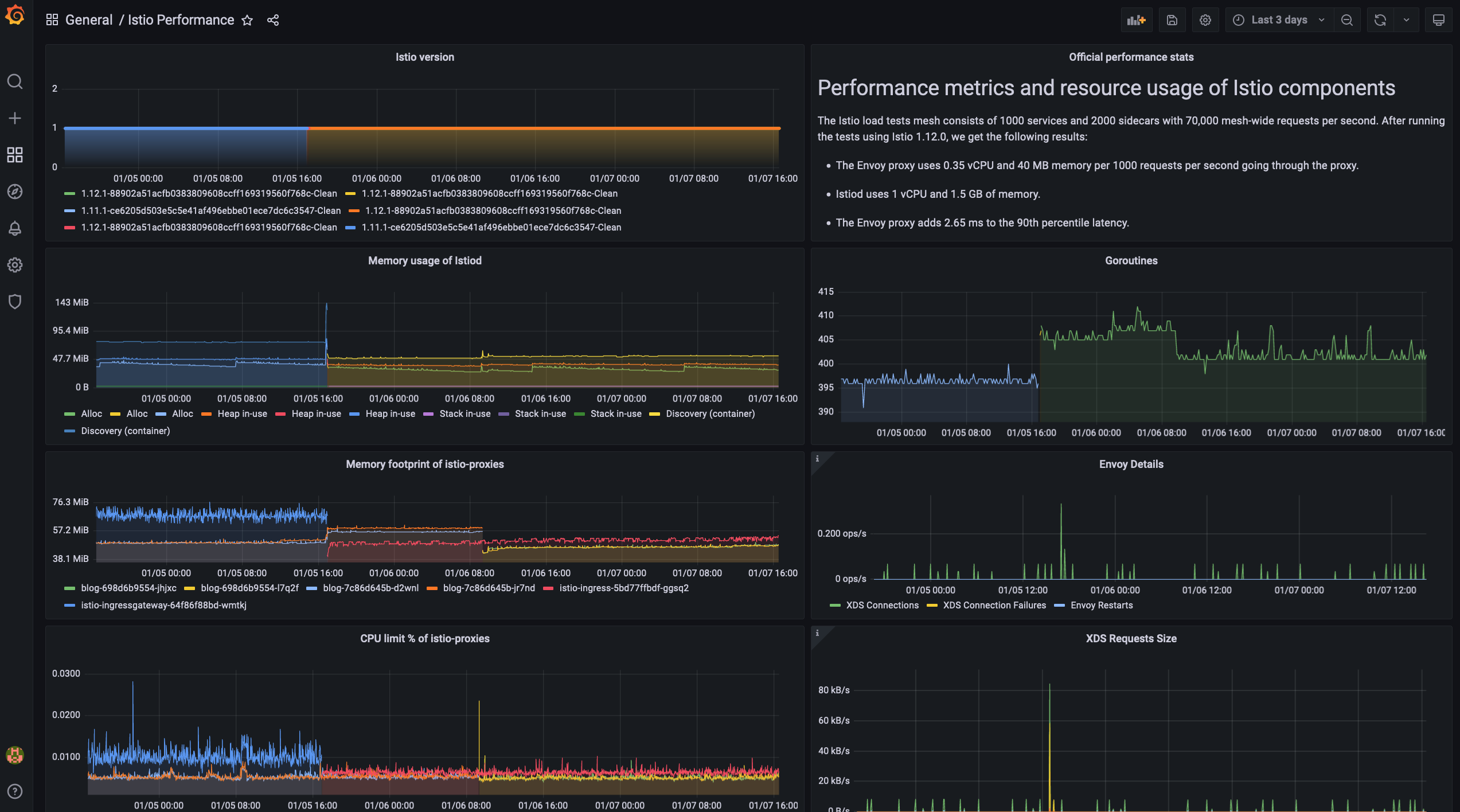

Regarding performance, this is another great release.

My Discovery container’s memory consumption decreased by more than 35%, and the sidecars are using over 20% less memory as well.

CPU usage also decreased by about 50%, and it is less spiky now.

Here’s the usual performance dashboard:

The rolling restart of the workloads happened the morning after the upgrade, hence the two inflection points in the graphs.